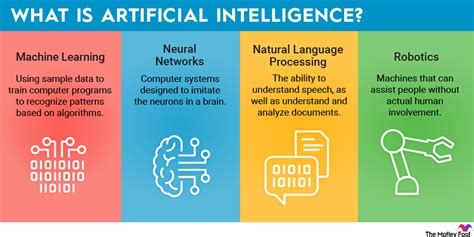

What Is Artificial Learning

Artificial learning, a term often used interchangeably with machine learning, is a field of computer science that has changed the way we interact with technology. It enables machines and systems to learn from data, patterns, and experience instead of relying on explicitly programmed rules for every situation. It is a key component of artificial intelligence (AI) and has found its way into numerous industries, reshaping the way we live, work, and innovate.

The Evolution of Artificial Learning

Artificial learning, a subset of AI, has evolved significantly since its beginnings. It started as a theoretical concept in the 1950s, when researchers set out to develop algorithms that could learn and improve over time. The term “machine learning” is generally credited to Arthur Samuel, who used it in 1959, and the field has grown rapidly since then.

The early algorithms focused on simple tasks like pattern recognition and classification. However, with advancements in computing power and data storage, the scope of artificial learning expanded dramatically. Today, it encompasses a wide range of techniques and applications, from image and speech recognition to natural language processing and predictive analytics.

Key Milestones in Artificial Learning

The journey of artificial learning has been marked by several significant milestones:

- 1980s: Neural networks, loosely inspired by the human brain, regained popularity as backpropagation made multi-layer networks practical to train, and were applied to complex pattern recognition tasks.

- 1990s: Decision-tree learning matured, and ensemble methods such as bagging and boosting, which combine many models, were developed to deliver more accurate predictions.

- 2000s: Research into deep learning, meaning neural networks with many layers, gathered momentum and laid the groundwork for models that learn directly from raw data.

- 2010s: Large datasets and GPU computing made deep learning practical at scale, leading to breakthroughs in image and speech recognition.

| Decade | Key Development |
|---|---|
| 1950s | Theoretical concepts and early algorithms |
| 1980s | Neural networks and pattern recognition |
| 1990s | Decision trees and ensemble methods |
| 2000s | Deep learning and complex data processing |
| 2010s | Big data and breakthroughs in recognition tasks |

How Artificial Learning Works

At its core, artificial learning is about enabling machines to learn from data. It involves creating algorithms and models that can identify patterns, make predictions, and improve over time. The learning process typically follows a cyclical pattern:

- Data Collection: The process begins with gathering relevant data, which could be images, text, numbers, or any other form of information.

- Training: The data is then used to train the machine learning model. This involves feeding the data into the model and adjusting its internal parameters to minimize errors.

- Testing: Once trained, the model is tested on new, unseen data to evaluate its performance and accuracy.

- Improvement: Based on the test results, the model is further refined and improved. This process is repeated iteratively until the desired level of accuracy is achieved.
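The cycle above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production workflow: the data is simulated from a hypothetical rule y = 3x + 2 with added noise, the model is a straight line fitted by gradient descent, and the function names (`collect_data`, `train`, `mse`) and the 0.05 error target are invented for this example.

```python
import random

def collect_data(n, seed=0):
    """Data collection (simulated): noisy samples of a hypothetical rule y = 3x + 2."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        samples.append((x, 3.0 * x + 2.0 + rng.gauss(0.0, 0.1)))
    return samples

def mse(w, b, samples):
    """Mean squared error of the model y = w*x + b on a set of samples."""
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)

def train(samples, epochs, lr=0.1):
    """Training: start from w = b = 0 and adjust both by gradient descent on the MSE."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

data = collect_data(200)
train_set, test_set = data[:150], data[150:]  # hold out unseen data for testing

# Improvement: retrain from scratch with more epochs until the
# held-out error meets the (hypothetical) target, with a safety cap.
target, epochs = 0.05, 10
while True:
    w, b = train(train_set, epochs)
    test_error = mse(w, b, test_set)  # testing on data the model never saw
    if test_error <= target or epochs >= 1000:
        break
    epochs *= 2

print(f"epochs={epochs}, w={w:.2f}, b={b:.2f}, test MSE={test_error:.4f}")
```

Because the loop stops as soon as the held-out error is low enough, the amount of training is itself chosen from test results, mirroring the Improvement step. In practice that tuning is usually done on a separate validation set, so that the final test score remains an unbiased estimate of performance on new data.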

Types of Artificial Learning Algorithms

There are several types of artificial learning algorithms, each designed for specific tasks and use cases. Here are some of the most common ones:

- Supervised Learning: Algorithms learn from labeled data, where the correct output is provided for each input. This is commonly used for classification and regression tasks.

- Unsupervised Learning: Here, algorithms learn from unlabeled data, identifying patterns and relationships without any predefined outputs. Clustering and dimensionality reduction are common unsupervised learning tasks.

- Reinforcement Learning: This type of learning involves an agent interacting with an environment. The agent learns through trial and error, receiving rewards or penalties based on its actions. It’s often used in game playing and robotics.

- Semi-Supervised Learning: A combination of supervised and unsupervised learning, this approach uses a small amount of labeled data along with a large amount of unlabeled data to improve the model’s performance.
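Two of these categories can be made concrete with short sketches. `k_means` groups unlabeled points without any predefined outputs (unsupervised learning, here Lloyd's k-means algorithm with a simplistic "first k points" initialization), and `epsilon_greedy` learns which of several actions pays best purely from simulated rewards. The latter is a multi-armed bandit, the simplest reinforcement-learning setting, with no environment state; the points, reward means, step count, and exploration rate are illustrative assumptions.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def k_means(points, k, iterations=20):
    """Unsupervised learning: group unlabeled 2-D points into k clusters."""
    centroids = list(points[:k])  # simplistic initialization: the first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest centroid.
            nearest = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # move each centroid to the mean of its members
                centroids[i] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return centroids

def epsilon_greedy(true_means, steps=2000, epsilon=0.1, seed=0):
    """Reinforcement-style trial and error on a multi-armed bandit (no state)."""
    rng = random.Random(seed)
    n = len(true_means)
    counts = [0] * n       # how often each action was tried
    estimates = [0.0] * n  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                           # explore
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[action], 1.0)  # simulated environment feedback
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

points = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
centroids = sorted(k_means(points, k=2))
estimates, counts = epsilon_greedy([0.2, 0.5, 1.5])
print(centroids)
print(counts)
```

The bandit agent is never told which action is best; it discovers that the third action pays most and ends up choosing it far more often than the others, while the occasional random exploration keeps its estimates of the other actions from going stale.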

Real-World Applications of Artificial Learning

Artificial learning has found its way into numerous industries, enhancing processes and revolutionizing the way we operate. Here are some key areas where its impact is most felt:

Healthcare

In healthcare, artificial learning is used for various tasks, including disease diagnosis, drug discovery, and personalized medicine. For instance, it can analyze medical images to detect tumors or predict disease progression. It also plays a crucial role in developing precision medicine, where treatments are tailored to individual patients.

Finance

The finance industry leverages artificial learning for fraud detection, risk assessment, and algorithmic trading. Machine learning algorithms can analyze vast amounts of financial data, identify patterns, flag unusual activity, and estimate risk, helping financial institutions make better-informed decisions.
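As a toy illustration of the fraud-detection idea, the sketch below "learns" a customer's typical spending from past transactions and flags new ones that fall far outside it. It is a deliberately simple statistical anomaly check, not how any particular institution detects fraud; the function names, the transaction amounts, and the 3-standard-deviation threshold are hypothetical.

```python
from statistics import mean, stdev

def fit_spending_profile(history):
    """Learn a customer's typical transaction amount (mean and spread) from past data."""
    return mean(history), stdev(history)

def is_suspicious(amount, profile, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the learned mean."""
    mu, sigma = profile
    return abs(amount - mu) > threshold * sigma

history = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.8]  # hypothetical past amounts
profile = fit_spending_profile(history)
new_transactions = [43.0, 49.5, 980.0]
flags = [is_suspicious(t, profile) for t in new_transactions]
print(flags)  # [False, False, True]
```

Real systems combine many more signals (merchant, location, timing, and so on), but the principle is the same: the notion of "normal" comes from data rather than from a hand-written rule.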

Retail and E-commerce

Artificial learning powers personalized recommendations, dynamic pricing, and customer segmentation in retail and e-commerce. It also enables more accurate demand forecasting and inventory management, optimizing the entire supply chain.
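Personalized recommendations are often built with collaborative filtering. The sketch below shows one simple variant, item-based filtering with cosine similarity: items bought by the same customers are treated as similar, and a customer is offered the unowned items most similar to what they already bought. The purchase data and function names are hypothetical.

```python
from math import sqrt

# Hypothetical purchase data: 1 means the customer bought the item.
purchases = {
    "alice": {"tent": 1, "stove": 1, "lantern": 1},
    "bob":   {"tent": 1, "stove": 1},
    "carol": {"tent": 1, "lantern": 1, "kayak": 1},
    "dave":  {"kayak": 1, "paddle": 1},
}

def item_vector(item):
    """Represent an item by which customers bought it (0/1 per customer)."""
    return {user: items.get(item, 0) for user, items in purchases.items()}

def cosine(a, b):
    """Cosine similarity between two vectors keyed by the same customers."""
    dot = sum(a[u] * b[u] for u in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def recommend(user, top_n=2):
    """Score unowned items by their total similarity to items the user owns."""
    owned = set(purchases[user])
    all_items = {i for items in purchases.values() for i in items}
    scores = {}
    for candidate in all_items - owned:
        cand_vec = item_vector(candidate)
        scores[candidate] = sum(cosine(cand_vec, item_vector(o)) for o in owned)
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]

print(recommend("bob"))  # ['lantern', 'kayak']
```

Bob is offered a lantern first because customers who bought a tent and stove also tended to buy one; nobody wrote that rule by hand.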

Autonomous Vehicles

Self-driving cars and drones rely heavily on artificial learning. These vehicles use machine learning algorithms to perceive their environment, make real-time decisions, and navigate safely. From object detection to path planning, artificial learning is a key enabler of autonomous mobility.

Natural Language Processing

Artificial learning has significantly advanced natural language processing (NLP). NLP applications, such as language translation, sentiment analysis, and chatbots, use machine learning to understand and generate human language, enhancing communication between humans and machines.
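Sentiment analysis is a typical example of supervised learning on text. The sketch below trains a multinomial Naive Bayes classifier, a classic and simple baseline that is far less capable than the models behind modern translators and chatbots, on a four-sentence hypothetical training set. It uses add-one (Laplace) smoothing so that a word never seen with a label does not zero out that label's score.

```python
import math
from collections import Counter

# Tiny hypothetical labeled training set.
training = [
    ("great product, works perfectly", "pos"),
    ("love it, great value", "pos"),
    ("terrible, broke after a day", "neg"),
    ("awful quality, do not buy", "neg"),
]

def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    return [w.strip(".,!?").lower() for w in text.split()]

def train_naive_bayes(examples):
    """Count word frequencies per label and how often each label occurs."""
    word_counts = {label: Counter() for _, label in examples}
    label_counts = Counter(label for _, label in examples)
    for text, label in examples:
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def predict(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # log P(label) + sum of log P(word | label), with add-one smoothing
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for w in tokenize(text):
            score += math.log((word_counts[label][w] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(training)
print(predict("great value", model))       # pos
print(predict("terrible quality", model))  # neg
```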

Challenges and Ethical Considerations

While artificial learning has immense potential, it also presents several challenges and ethical considerations:

- Data Quality: The performance of machine learning models heavily relies on the quality and quantity of data used for training. Biased or inadequate data can lead to inaccurate or unfair results.

- Explainability: As machine learning models become more complex, it becomes challenging to interpret their decisions. This lack of transparency can hinder trust and adoption, especially in critical applications like healthcare or finance.

- Privacy and Security: With the vast amount of data processed, privacy and security become critical concerns. Ensuring data protection and preventing unauthorized access or manipulation are essential aspects of responsible artificial learning deployment.

- Job Displacement: The automation brought about by artificial learning can lead to job displacement, especially in sectors heavily reliant on repetitive tasks. Retraining and reskilling workers become crucial to mitigate this impact.
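The data-quality concern can be checked in practice by breaking an evaluation metric down by group instead of reporting a single overall number. In the hypothetical results below, overall accuracy is 75%, which hides the fact that the model is right every time for group "A" and only half the time for group "B". The group labels, results, and the `accuracy_by_group` helper are invented for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: (group, true_label, predicted_label) tuples -> accuracy per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical evaluation results, e.g. from a model trained mostly on group "A".
results = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 1, 1),
]
per_group = accuracy_by_group(results)
gap = max(per_group.values()) - min(per_group.values())
print(per_group)  # {'A': 1.0, 'B': 0.5}
print(gap)        # 0.5
```

A large gap does not by itself explain the cause, but it signals that the training data or the model deserves a closer look before deployment.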

The Future of Artificial Learning

The future of artificial learning looks promising, with ongoing research and development pushing the boundaries of what’s possible. Here are some key trends and predictions:

- Increased Adoption: As the benefits of artificial learning become more evident, we can expect wider adoption across industries. From small businesses to large enterprises, machine learning will become an integral part of everyday operations.

- Explainable AI: Researchers are focusing on developing more transparent and interpretable machine learning models. This “Explainable AI” will help address concerns around trust and fairness, making artificial learning more accessible and acceptable.

- Edge Computing: With the growth of IoT devices, edge computing will play a crucial role in artificial learning. Processing data closer to the source will reduce latency and improve efficiency, especially in real-time applications like autonomous vehicles or industrial automation.

- Ethical Frameworks: As artificial learning becomes more prevalent, ethical considerations will become even more critical. Developing clear guidelines and frameworks for responsible AI development and deployment will be essential to ensure a fair and equitable future.

What is the difference between artificial learning and traditional programming?

In traditional programming, the logic and instructions for a task are explicitly defined by a human programmer. In contrast, artificial learning enables machines to learn and adapt without being explicitly programmed. The machine learns from data and experiences, making decisions or predictions based on that knowledge.
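The contrast can be shown with a toy spam filter based on a single hypothetical feature, the number of exclamation marks in a message. In the traditional version a programmer writes the threshold by hand; in the learned version the threshold is chosen automatically as the one that makes the fewest mistakes on labeled examples (a one-feature decision stump). The feature, data, and function names are assumptions for illustration only.

```python
# Traditional programming: a human writes the rule explicitly.
def is_spam_rule(exclamations):
    return exclamations > 3  # threshold chosen by the programmer

# Artificial learning: the threshold is learned from labeled examples.
def learn_threshold(examples):
    """Pick the threshold that misclassifies the fewest (feature, label) examples."""
    candidates = sorted({x for x, _ in examples})

    def errors(t):
        return sum((x > t) != label for x, label in examples)

    return min(candidates, key=errors)

# Hypothetical labeled data: (number of '!' in a message, is_spam)
examples = [(0, False), (1, False), (2, False), (5, True),
            (6, True), (8, True), (1, False), (7, True)]
threshold = learn_threshold(examples)

def is_spam_learned(exclamations):
    return exclamations > threshold

print(threshold)  # 2
```

If the labeled data changes, `learn_threshold` can simply be rerun, whereas the hand-written rule stays fixed until a programmer edits it.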

How does artificial learning improve over time?

Artificial learning algorithms improve through a process called “training.” During training, the algorithm makes predictions on example data, measures its errors, and adjusts its internal parameters to reduce them. Repeating this process, and retraining as more relevant, good-quality data becomes available, generally improves the model’s accuracy over time.

What are some potential risks associated with artificial learning?

While artificial learning offers immense benefits, it also comes with potential risks. These include bias in data or algorithms, privacy concerns with large-scale data processing, and the potential for job displacement as certain tasks become automated. Responsible development and deployment practices are essential to mitigate these risks.