What Is Scraping

Web scraping, often referred to simply as scraping, is the technique of automatically extracting data from websites. It is widely used across industries for tasks such as market research, price monitoring, and data analysis. This guide explains what web scraping is, what it is used for, and how it turns the large amount of information available online into usable data.

The Art of Web Scraping: Unlocking Data’s Potential

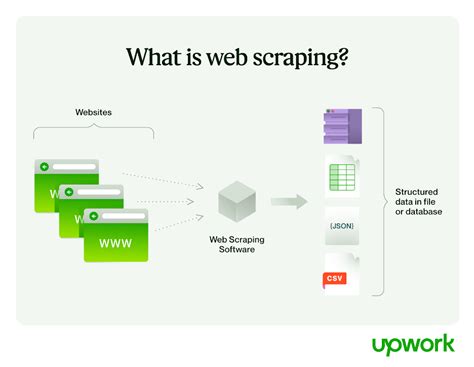

Web scraping, at its core, is the automated process of retrieving and collecting data from websites. Instead of a person browsing and copying content by hand, a program fetches web pages and extracts structured information from them. This data can include text, images, links, and metadata, all of which can then be analyzed and used for various purposes.

The Purpose of Web Scraping

The primary objective of web scraping is to gather and organize data that is otherwise scattered across numerous web sources. By automating data collection, web scraping allows businesses, researchers, and individuals to access and use information efficiently. The extracted data can be used for a multitude of tasks, from market research and price monitoring to content aggregation and data analysis.

For instance, imagine a scenario where a company wants to compare prices across multiple e-commerce platforms. Manual browsing and note-taking would be time-consuming and prone to errors. Web scraping offers a solution by automating the process, enabling the company to gather pricing data accurately and efficiently. This data can then be analyzed to make informed decisions regarding pricing strategies and market positioning.

Key Applications of Web Scraping

- Market Research: Web scraping is a valuable tool for market analysts and researchers. It enables them to gather data on competitors’ products, pricing, and customer reviews, which informs strategic decision-making.

- Price Monitoring: Businesses can utilize web scraping to track pricing trends, identify price discrepancies, and ensure their pricing remains competitive. This is particularly beneficial for e-commerce businesses operating in dynamic markets.

- Content Aggregation: Web scraping is commonly used to aggregate content from various sources. News organizations, for example, can scrape multiple news websites and combine the results into a single, comprehensive news feed.

- Data Analysis: The extracted data can be analyzed to identify patterns, trends, and correlations. This analytical approach is valuable for businesses, researchers, and data scientists, helping them make data-driven decisions.

A Real-World Example: Retail Price Monitoring

Consider a retail company selling electronic gadgets. To stay competitive, they need to regularly monitor the prices of similar products offered by their competitors. Web scraping comes into play by automating the process of collecting price data from various e-commerce platforms. This enables the company to quickly identify the lowest prices for each product, helping them adjust their pricing strategies accordingly.

| Product | Competitor A | Competitor B | Competitor C |
|---|---|---|---|
| Smartphone X | $599 | $629 | $589 |
| Laptop Y | $999 | $1099 | $949 |
| Tablet Z | $299 | $329 | $289 |
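Once prices have been collected, finding the lowest offer for each product is a small aggregation step. The sketch below hard-codes the figures from the price table as a stand-in for scraped data; the dictionary layout and the `lowest_prices` helper are illustrative assumptions, not part of any particular tool.

```python
from typing import Dict, Tuple

# Prices from the table, keyed by product and then by competitor (USD).
# In practice a scraper would fill this in; here the values are hard-coded.
PRICES: Dict[str, Dict[str, int]] = {
    "Smartphone X": {"Competitor A": 599, "Competitor B": 629, "Competitor C": 589},
    "Laptop Y": {"Competitor A": 999, "Competitor B": 1099, "Competitor C": 949},
    "Tablet Z": {"Competitor A": 299, "Competitor B": 329, "Competitor C": 289},
}


def lowest_prices(prices: Dict[str, Dict[str, int]]) -> Dict[str, Tuple[str, int]]:
    """Return the cheapest competitor and its price for each product."""
    result = {}
    for product, offers in prices.items():
        # min() over (competitor, price) pairs, comparing on the price only.
        competitor, price = min(offers.items(), key=lambda item: item[1])
        result[product] = (competitor, price)
    return result


if __name__ == "__main__":
    for product, (competitor, price) in lowest_prices(PRICES).items():
        print(f"{product}: ${price} at {competitor}")
```

With these figures, Competitor C is the cheapest seller for all three products.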

The Technical Aspect: How Web Scraping Works

Web scraping involves several key components and processes. Understanding these technical aspects is crucial for both developers and users of web scraping tools.

The Role of HTTP Requests

Web scraping begins with sending HTTP requests to web servers. These requests are similar to the ones made when browsing the web manually. However, in web scraping, the requests are automated and often involve specialized techniques to retrieve specific data.
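In Python, the standard library’s `urllib.request` module is enough to send such a request. The minimal sketch below sets an explicit `User-Agent` header and a timeout; the header value and the helper names `build_request` and `fetch_html` are hypothetical choices for illustration.

```python
import urllib.request

# Hypothetical identifier for the scraper; a descriptive value with contact
# details lets site operators see who is making the requests.
USER_AGENT = "example-price-monitor/0.1 (contact@example.com)"


def build_request(url: str, user_agent: str = USER_AGENT) -> urllib.request.Request:
    """Prepare a GET request with an explicit User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send the request and decode the response body as text."""
    request = build_request(url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # Fall back to UTF-8 if the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `fetch_html("https://example.com/")` would return that page’s HTML as a string, ready for parsing.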

Parsing HTML and CSS

Once the web server responds to the HTTP request, the scraper needs to parse the page’s HTML. This process involves understanding the structure of the page and extracting the desired data, often by locating elements with CSS selectors. HTML parsers, such as BeautifulSoup in Python, are commonly used for this purpose.
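BeautifulSoup is a third-party package, so to keep the example dependency-free, the sketch below uses Python’s built-in `html.parser` module to walk the tags of a page and collect each link’s target and text. The sample HTML and the `LinkExtractor` class are illustrative assumptions; with BeautifulSoup, a similar result would typically come from `BeautifulSoup(html, "html.parser").find_all("a")`.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs from every <a> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[Tuple[str, str]] = []
        self._href: Optional[str] = None  # href of the <a> currently open
        self._text: List[str] = []  # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs arrives as a list of (name, value) pairs.
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


SAMPLE_HTML = """
<ul class="products">
  <li><a href="/p/smartphone-x">Smartphone X</a></li>
  <li><a href="/p/laptop-y">Laptop <b>Y</b></a></li>
</ul>
"""

parser = LinkExtractor()
parser.feed(SAMPLE_HTML)
print(parser.links)
# [('/p/smartphone-x', 'Smartphone X'), ('/p/laptop-y', 'Laptop Y')]
```

Because the parser tracks which element is open, text nested inside the link (the `<b>Y</b>`) is still attributed to the right `href`.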

Data Extraction Techniques

There are various techniques for extracting data from web pages. Some common methods include:

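- Regular Expressions: These are powerful tools for matching and extracting specific patterns of text, such as prices or dates. They become fragile when applied to whole HTML documents, whose markup can vary.

- DOM (Document Object Model) Parsing: Parsing a page into its DOM tree allows more precise data extraction, because the scraper can navigate the page’s hierarchical structure of elements.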

- API Integration: Some websites provide APIs (Application Programming Interfaces) that allow developers to access their data directly, bypassing the need for scraping.
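The first and third techniques can be sketched briefly: a regular expression pulls dollar amounts out of a text snippet, and when a site offers an API, its JSON response can be decoded directly without touching HTML. The sample text, the JSON payload, and its field names are invented for illustration; a real API documents its own format.

```python
import json
import re
from typing import Dict, List

# Matches amounts such as "$1099", "$1,099" or "$949.00": a dollar sign,
# digits with optional thousands separators, and an optional cents part.
PRICE_PATTERN = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text: str) -> List[float]:
    """Return every dollar amount found in the text as a float."""
    return [float(m.replace(",", "")) for m in PRICE_PATTERN.findall(text)]


# Hypothetical API response; real field names depend on the provider.
API_RESPONSE = '{"products": [{"name": "Tablet Z", "price": 299.0}]}'


def prices_from_api(payload: str) -> Dict[str, float]:
    """Map product names to prices from a JSON API payload."""
    data = json.loads(payload)
    return {item["name"]: item["price"] for item in data["products"]}


print(extract_prices("Was $1,099, now $949.00 (save $150)"))  # [1099.0, 949.0, 150.0]
print(prices_from_api(API_RESPONSE))  # {'Tablet Z': 299.0}
```

The API route avoids pattern matching entirely, which is why it is usually the more robust option when it is available.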

Handling Dynamic Content

Web pages often contain dynamic content that changes based on user interactions or real-time data. Scraping dynamic content requires additional techniques, such as rendering the page in a headless browser that executes its JavaScript, or employing browser automation tools like Selenium.

Data Storage and Management

After extracting the data, it needs to be stored and managed effectively. Common data storage options include CSV files, Excel spreadsheets, databases (e.g., MySQL, PostgreSQL), and cloud storage solutions (e.g., AWS S3, Google Cloud Storage).
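Python’s standard library covers two of these options directly: the `csv` module for CSV files and `sqlite3` for a lightweight SQL database. The sketch below writes the same hypothetical price records to both, using an in-memory buffer and an in-memory database so it runs without touching disk. Server databases such as MySQL or PostgreSQL need their own driver packages but follow a similar pattern.

```python
import csv
import io
import sqlite3

# Hypothetical scraped records: (product, competitor, price in USD).
RECORDS = [
    ("Smartphone X", "Competitor A", 599),
    ("Smartphone X", "Competitor C", 589),
    ("Tablet Z", "Competitor C", 289),
]


def to_csv(records) -> str:
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["product", "competitor", "price_usd"])
    writer.writerows(records)
    return buffer.getvalue()


def to_sqlite(records, path: str = ":memory:") -> sqlite3.Connection:
    """Store records in a SQLite table and return the open connection."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS prices ("
        "product TEXT, competitor TEXT, price_usd INTEGER)"
    )
    # Parameterized inserts avoid building SQL strings from scraped text.
    conn.executemany("INSERT INTO prices VALUES (?, ?, ?)", records)
    conn.commit()
    return conn


print(to_csv(RECORDS))
db = to_sqlite(RECORDS)
print(db.execute("SELECT MIN(price_usd) FROM prices").fetchone())  # (289,)
```

Passing a file path instead of `":memory:"` to `to_sqlite` would persist the table between runs.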

Ethical Considerations and Best Practices

While web scraping offers immense benefits, it is essential to approach it with ethical considerations in mind. Here are some best practices to follow:

Respect Website Terms and Conditions

Always review and adhere to the terms and conditions of the websites you are scraping. Some websites explicitly prohibit web scraping, and ignoring their rules can lead to legal issues.

Rate Limiting

To avoid overloading web servers, implement rate limiting. This means setting a limit on the number of requests sent to a website within a certain time frame. This practice ensures you don’t disrupt the website’s performance.
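A simple way to enforce such a limit is to space requests evenly so that no more than a chosen number go out per time window. The sketch below is one possible approach under that assumption, not a standard API; the `RateLimiter` class is hypothetical, and its clock and sleep functions are injectable so the timing logic can be tested without real delays.

```python
import time
from typing import Callable, Optional


class RateLimiter:
    """Space calls so at most `max_requests` happen per `period` seconds."""

    def __init__(
        self,
        max_requests: int,
        period: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        # Minimum gap between two consecutive requests, in seconds.
        self.interval = period / max_requests
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> None:
        """Block until enough time has passed since the previous request."""
        now = self._clock()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()  # re-read the clock after sleeping
        self._last = now
```

In a scraping loop, calling `limiter.wait()` immediately before each request keeps the pace; for example, `RateLimiter(max_requests=1, period=2.0)` spaces requests at least two seconds apart.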

Handle CAPTCHAs and Bot Detection

Websites often use CAPTCHAs and bot detection mechanisms to differentiate between human users and bots. When encountering CAPTCHAs, consider using human-assisted CAPTCHA-solving services or implementing image recognition techniques.

Avoid Deep Linking

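Deep linking usually means linking directly to a page inside a website rather than to its homepage. In scraping, the concern is accessing pages that the site does not expose through its normal navigation. This practice can be seen as intrusive and may violate website policies, so it’s best to stick to publicly accessible pages.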

Use Proxy Servers

Proxy servers can help distribute your requests across multiple IP addresses, making your scraping activities less noticeable. This reduces the risk of being blocked by websites.
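Distributing requests this way often comes down to rotating through a list of proxies. The sketch below uses `itertools.cycle` for round-robin selection and `urllib.request.ProxyHandler` to route each request; the proxy addresses (from a documentation-reserved IP range) and the `next_opener` helper are hypothetical placeholders.

```python
import itertools
import urllib.request
from typing import Tuple

# Hypothetical proxy endpoints; replace with proxies you are authorized to use.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# Endless round-robin iterator over the proxy list.
_proxy_cycle = itertools.cycle(PROXIES)


def next_opener() -> Tuple[str, urllib.request.OpenerDirector]:
    """Return the next proxy in round-robin order and an opener that uses it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)
```

Each call hands back a fresh opener; `opener.open(url, timeout=10)` would then send a request through the selected proxy.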

The Future of Web Scraping: Innovations and Challenges

The field of web scraping is constantly evolving, driven by advancements in technology and changing online dynamics. Here’s a glimpse into the future of web scraping:

Artificial Intelligence and Machine Learning

AI and ML algorithms are being integrated into web scraping tools, enabling more intelligent and accurate data extraction. These technologies can learn and adapt to the changing structures of web pages, making scraping more efficient.

Cloud-Based Scraping Services

Cloud-based scraping services offer scalability and flexibility. These services provide access to powerful computing resources, allowing users to handle large-scale scraping projects efficiently.

Overcoming Anti-Scraping Measures

As websites implement more sophisticated anti-scraping measures, the challenge for web scrapers increases. Developing techniques to bypass these measures while maintaining ethical practices is an ongoing pursuit.

Web Scraping as a Service

With the increasing demand for web scraping, specialized companies are offering web scraping as a service. These providers handle the technical aspects, allowing businesses to focus on utilizing the extracted data.

Conclusion

Web scraping is a powerful tool with a wide range of applications, from market research to data analysis. By automating the process of data extraction, it enables businesses and individuals to make informed decisions based on accurate and up-to-date information. As the digital landscape continues to evolve, web scraping will play an even more critical role in unlocking the potential of online data.

What are some common use cases for web scraping?

Web scraping has a wide range of applications. Common use cases include market research, price monitoring, content aggregation, and data analysis. It is used by businesses, researchers, and individuals to gather and utilize data from various online sources.

Is web scraping legal?

The legality of web scraping depends on various factors, including the jurisdiction and the terms and conditions of the websites being scraped. It’s crucial to respect the website’s policies and avoid scraping data that is explicitly prohibited. Always consult legal experts for specific guidance.

What are some challenges in web scraping?

Challenges in web scraping include dynamic content, anti-scraping measures implemented by websites, and the need for continuous updates to scraping tools as websites change their structures. Additionally, ensuring ethical practices and respecting website policies can be complex.

How can I get started with web scraping?

Getting started with web scraping involves learning programming languages like Python, understanding web technologies (HTML, CSS, and JavaScript), and choosing the right tools and libraries for your specific needs. There are also online courses and tutorials available to guide beginners.

Are there any alternatives to web scraping?

Yes, alternatives to web scraping include using APIs provided by websites, which offer a more direct and often more efficient way to access data. Additionally, data aggregation platforms and web services that specialize in data collection can be considered as alternatives.